Will Generative AI Disrupt the Music Industry?

On new music generation tools: will Generative AI ever match human creativity when it comes to music?

Fun fact: more than a third of my team members make music. That is an unusually high proportion, considering we all work in Health Tech, doing software engineering. Most of us are amateurs who play as a hobby, but some are actually pro musicians.

I think it has something to do with the type of people we hire. Health Tech is a tough domain to work in. It is highly regulated, slow and conservative, with hard problems and no quick wins. It attracts a particular type of person; nobody ends up here by coincidence. You need a deep passion for making a real difference in people’s lives, so the domain calls for people with high empathy. And perhaps, somehow, higher empathy is connected to music in the human brain.

For years, my co-founder and I have been joking about starting a team band. Until one day, we did.

We play covers, just for fun.

I’m the drummer.

Recently, several Generative AI models and tools for music generation have emerged, and they look like they have the potential to disrupt the music industry. In my previous post about Generative AI and the Hollywood Strike, I wrote about the potential impact of Generative AI on the film industry. Are we looking at a similar impact here? Will Generative AI disrupt the music industry?

In this post, I will share some early experiments with, and observations on, recent music generation models: generating lyrics, notes, music tracks and songs.

Creating New Music

When creating new music, you typically do not want to create more of the same; you want to create something that people have not heard before. Something unique and innovative that triggers emotions in other humans, and possibly something catchy. New riffs, new sub-genres, new sounds. Think about music that was just, well, kind of different when it first came out. Smells Like Teen Spirit by Nirvana, Let It Happen by Tame Impala, Sandstorm by Darude and Love Nwantiti by CKay are just a few diverse, far-from-representative examples.

A lot of creativity also comes from fusing genres, sometimes in unexpected ways. Some fusions that have since become very common include classical violins in soft rock ballads or Middle Eastern beats in pop and dance songs.

But the thing to remember here is that Generative AI is not really creative. It repeats patterns it has seen before in its training data. Would AI-generated music sound like one hundred other songs that you have heard before?

Generating Lyrics and Notes

Generative AI can compose lyrics for songs, as well as suggest notes for the music. That does not mean the generated music is good. Most of the lyrics I’ve seen generated so far were kind of cringe.

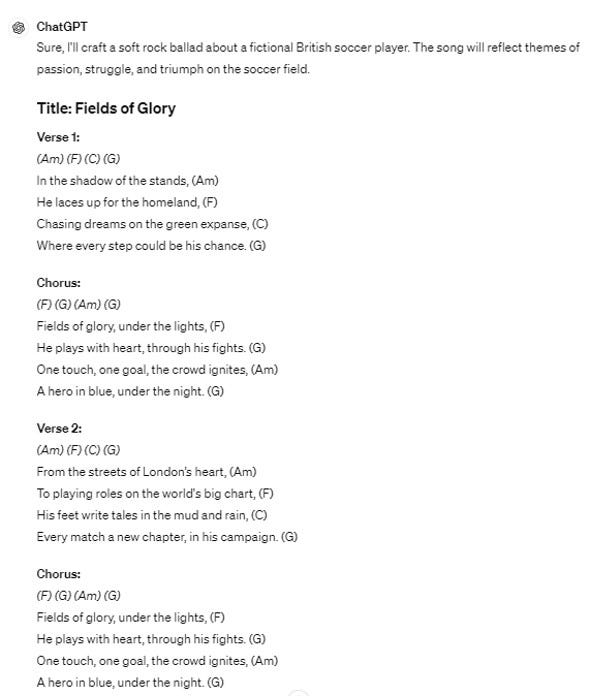

In a basic experiment, I prompted ChatGPT4 to produce lyrics and notes for a song about a British soccer player. It produced a structure, with a verse, chorus and a bridge. The lyrics look somewhat like a rock ballad parody:

It also provided detailed guitar chords:

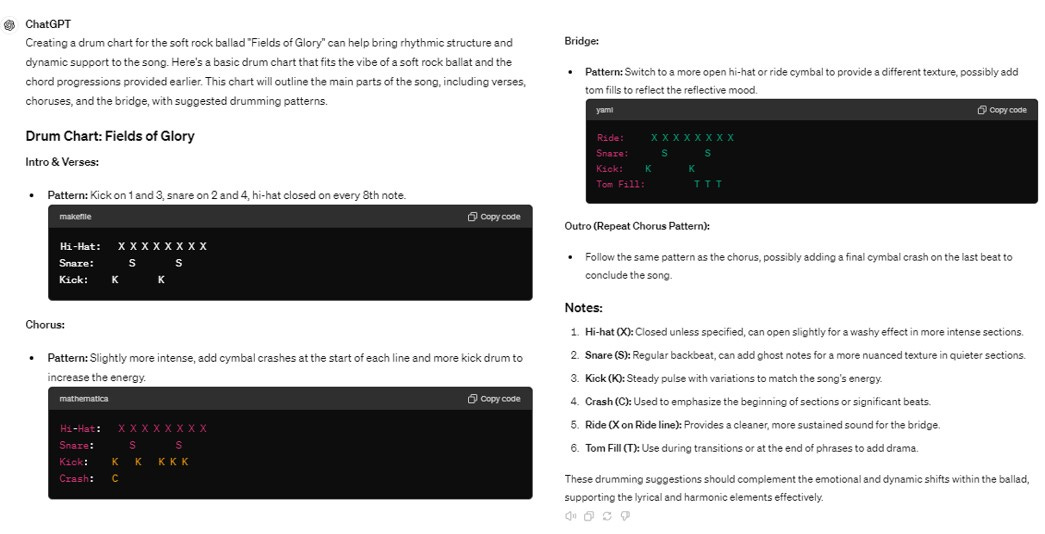

My live-in guitarist, aka hubs, played along to try it out. The chords made sense, but to put it bluntly, the result is just boring. Per my request, it also provided drum notes:

The drumming was very simple, as basic as page 1 of Mini Monster, with nothing interesting like flams or syncopated patterns. It makes sense, but again, it is boring.

Alright, let’s move on to more advanced tools.

Music Generation Tools

Several music generation tools have emerged recently. I looked at a couple of them: a promising tool called Suno AI, and a new tool named Udio. There are more.

Music generation models typically work by training on large datasets that contain both music and corresponding lyrics.

These tools let you prompt for different genres, and you can even specify which musical instruments to include. You can bring your own lyrics, or have the tool generate lyrics for you based on a description of the theme in the prompt. Here are some of my conclusions.

The New Song Experiment

To examine the tools, I used lyrics I wrote more than a year ago after a pro-democracy rally, the one that later became known as the Umbrellas Demonstration. The original lyrics were in my native language, and I called the song “Sea of Umbrellas”.

I translated the lyrics into English (song translation with ChatGPT doesn’t work well; don’t get me started, but see my previous post on Generative AI and Translation).

Then I tried Suno AI and Udio on this song, with and without providing the lyrics, in English and in my native language, generating a couple dozen results in each tool.

Most results from Suno AI and Udio were not great. While both tools are impressive and the tech is cool, my key observation was that the music felt artificial and did not trigger any emotion for me.

I’ll admit that I did hear some generated music that was really funny: people turning user manuals or just silly texts into songs in various genres. One example is an imaginary Brazilian singer from the 1970s performing super funny, silly songs that were generated by Udio and recently went viral. Well, you could argue that funny is a valid emotion.

Udio felt buggy, its results sounded very synthetic, and it pushed genres I had not prompted for into the result. Hey Udio people, if you are listening: when I ask for a soft rock ballad, I certainly do not expect to get glam metal!

Here is one example from Udio:

As for Suno AI, most of the results were either boring or just not a great fit for the lyrics. The tool tries to give the song a structure, but that structure often does not sit well, especially when I provided my own lyrics. Some of the results sounded plastic. Some sounded like things I had heard before.

However, one result from Suno AI did make sense to me as something I could work with. It wasn’t bad, and it even had a Coldplay-like solo in it. It was good enough for me to take into a music class and use as a starting point. And so I created a new song called “Rebels in the Rain”, based on my original “Sea of Umbrellas”, using some suggestions from Suno AI and then taking it in my own direction. The originally generated result is below, and the lyrics are at the end of the post. I will be playing my revised version of this song with one of my bands in the upcoming weeks, so follow my Instagram for a new reel coming soon.

What do you make of all of this?

Music generation tech is not a substitute for human creativity. The tech is cool. But the results have no soul to them.

Sure, if you need to create elevator music or music for ambiance or even background music for some tech demo clip, then those music generation tools are fine. You could also use them to generate some basic ideas to start with, like my experiment above. It is helpful. But it will not generate the next big hit without human intervention.

Generative AI is good at synthesizing and combining existing art, so for us as consumers, this would mean synthetic music that would feel like one hundred other songs that we have heard before. No disruption, no innovation.

Moreover, I think this will increase the importance of live music. Synthetic music will not be enough; people will want to go to live shows and watch bands play.

The conclusion here is that music will continue to be created and performed by humans, but humans will likely start using Generative AI as a supportive tool. We call these Generative AI-based tools that assist humans Copilots.

Rebels in the Rain
Written by: Hadas Bitran and Suno AI

[Verse]
In the dark night
In the pouring rain
You and I
Standing side by side
In a sea of umbrellas
Hand in hand we stand

[Verse 2]
In a large crowd
Nowhere else to go
Raise our voice
Standing for our home
In a sea of umbrellas
In the pouring rain

[Bridge]
Hand in hand we stand
Rebels in the rain

[Chorus]
We won't back down
We won't be silent
We’re protecting our home
We know we’re not alone
United through the pain
We will never be the same
Together we'll remain
Rebels in the rain

About Verge of Singularity.

About me: Real person. Opinions are my own. Blog posts are not generated by AI.

LinkedIn: https://www.linkedin.com/in/hadas-bitran/

X: @hadasbitran

Instagram: @hadasbitran

As in many other domains, time will tell. Live music that has variations and improvisations might be a challenging act for AI to follow, but is there a tech barrier (given the needed resources) to writing good lyrics? Or harmonies that sound good? Let's say Spotify wanted to experiment with generating music and let the public decide what sounds good... Music today is already being manipulated and fixed by sound engineers. The human soul and emotion in music cannot be replaced by machines, but might they present a decent imitation at some point in the future?

It took a long time and a small push from corporate, in the form of an internal human<->AI music collab contest, but here's our team band performing "Rebels in the Rain", human-enhanced version: https://www.instagram.com/reel/DMdtU_Zo_kn/?utm_source=ig_web_copy_link&igsh=MWU4bGI2bXRuNGYwaw==

Yes, some people have strange hobbies.