Generative AI and the Hollywood Strike

The potential impact of Generative AI on the film industry: Generative AI core concepts, issues and blind spots in the context of the SAG-AFTRA/WGA strike and ways to approach some of the gaps.

A very different blog post from my usual ones, on a topic that is not as casual as it may seem.

The British actor I follow on social media had not posted anything for a while. That seemed odd, considering he had just had a new film released. Moreover, it seemed that no one in Hollywood was promoting their films last summer. That is how I became aware of the Hollywood SAG-AFTRA strike, and of the WGA strike that took place around the same time.

Both strikes were about improved pay and working conditions. One of the key topics that played a central role in the SAG-AFTRA strike was the increasing use of Artificial Intelligence in the entertainment industry.

Artificial Intelligence is my day-to-day work, so this got me curious. What’s going on?


And so, I dived in. Got so much to say.

In this article I will explain core concepts in Generative AI in simple terms, discuss the potential impact on the film industry through examples, flag issues, and share ways to approach some of the gaps.

This may seem like a casual topic given everything that is going on in the world right now. But it is not at all casual. This is about an industry going through a paradigm shift, about people potentially losing their jobs, and above all, about individuals’ rights to own their identity.

For those of you who are not familiar with the Hollywood strike, here’s a short background on this topic (if you know all about it, skip to the next section).

The SAG-AFTRA and WGA strikes

The American actors' union SAG-AFTRA (Screen Actors Guild – American Federation of Television and Radio Artists) was on strike from July to early November 2023. Shortly before that, the Writers Guild of America (WGA) went on strike, from May until the end of September 2023. Both strikes were over a labor dispute with the Alliance of Motion Picture and Television Producers (AMPTP). WGA represents 11,500 screenwriters. SAG-AFTRA represents over 160,000 media professionals worldwide, including actors, broadcast journalists, dancers, news writers and editors, recording artists, singers, stunt performers, voiceover artists, and others.

AI, and more specifically Generative AI, was a key issue in these disputes. In an era where AI can be used to replicate human appearances and voices, actors and performers were concerned that studios would use AI to scan their appearance and voice performances without their approval or compensation, creating digital replicas of them and using those in new productions.

The SAG-AFTRA strike achieved an agreement that aims to protect an actor's likeness and intellectual property rights. The agreement puts restrictions on the use of AI and requires producers to obtain the actors’ approval before scanning and reusing their performances, and to compensate the performers for that.

The WGA strike achieved an agreement that allows writers to make use of AI without undermining their credit or compensation. The writers did not, however, get one of their key demands on AI: a provision forbidding the studios from training AI systems on screenwriters’ work.

So, there are agreements in place, everything should be fine, right?

Right??

Well, to understand some of the issues, gaps, and their implications, let’s first get some technical background.

Generative AI and Training

Generative AI is a type of artificial intelligence that can create new content. Generative AI uses a lot of data it has seen before, like millions of pictures or texts, and learns from them to make new ones. It’s not just copying what it has seen – it is learning patterns and styles to create something new.

This process is called Training. Training an AI model means you show it a very large number of examples, and the model learns from these examples. The more examples it sees, the better it gets. Pre-trained generative AI models are models that have already been trained on a large dataset to learn certain patterns, structures, or features before they are fine-tuned for specific tasks.
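To make “learning patterns from examples” a bit more concrete, here is a deliberately tiny sketch: a bigram model that counts which word tends to follow which in a handful of example sentences, and turns those counts into probabilities. Models like GPT-4 are large neural networks trained on vastly more data, so treat this only as an analogy for the idea of training, not as how those models work internally. The function name and the toy sentences are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Hypothetical toy 'training': learn next-word probabilities by counting."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Count every adjacent (current word, next word) pair in this example.
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    # Normalize the raw counts into probabilities for each preceding word.
    model = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        model[current] = {word: n / total for word, n in followers.items()}
    return model


# Hypothetical training examples.
examples = [
    "the hero saves the city",
    "the hero loses the battle",
    "the villain escapes the city",
]
model = train_bigram_model(examples)
print(model["the"])
```

After “training” on these three sentences, the model has learned that “the” is followed by “hero” or “city” a third of the time each, and by “battle” or “villain” less often. It has picked up a pattern from the examples rather than memorizing a single sentence.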

Fine-tuning is a technique where AI models are customized to perform specific tasks or behaviors. It involves taking an existing model that has already been pre-trained and adapting it to a narrower subject or a more focused goal. For example, a generic pre-trained model that can generate natural language texts can be fine-tuned to produce a specific type of text by providing relevant examples.
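Continuing the same toy analogy, fine-tuning can be pictured as starting from the pre-trained counts and training further on a small, focused set of examples, weighted more heavily so they shift the model’s behavior. Real fine-tuning updates a neural network’s weights with gradient descent; the `weight` parameter, the function names, and the example sentences here are hypothetical stand-ins chosen only to show the effect.

```python
from collections import Counter, defaultdict


def count_pairs(corpus: list[str], weight: float = 1.0) -> dict[str, Counter]:
    """Count adjacent word pairs; `weight` (hypothetical) scales these examples."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += weight
    return counts


def fine_tune(base: dict[str, Counter], narrow_corpus: list[str], weight: float) -> dict[str, Counter]:
    """Start from the pre-trained counts and keep 'training' on narrower examples."""
    tuned: dict[str, Counter] = defaultdict(Counter)
    for word, followers in base.items():
        tuned[word].update(followers)  # copy, so the base model stays unchanged
    for word, followers in count_pairs(narrow_corpus, weight).items():
        tuned[word].update(followers)  # add the weighted, task-specific counts
    return tuned


def next_word_probs(counts: dict[str, Counter], word: str) -> dict[str, float]:
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}


# Hypothetical generic "pre-training" data and narrow, style-specific data.
generic = ["the story ends happily", "the story ends sadly", "the story ends happily"]
screenplays = ["the story ends with a twist"]

base = count_pairs(generic)
tuned = fine_tune(base, screenplays, weight=3.0)
print(next_word_probs(base, "ends"))
print(next_word_probs(tuned, "ends"))
```

The base model never predicts “with” after “ends”; after fine-tuning on the narrow examples, “with” becomes the most likely continuation, while the patterns learned during pre-training are still there.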

Generative AI can be used to create new content of a wide range of types - we sometimes call those modalities - from generating text for a story or a screenplay, to creating new pictures, generating speech, video, or even creating new music. Some Generative AI models work on multiple modalities at the same time.

Let’s look at some of the art of the possible, mainly through examples:

Text generation and its impact on Screenwriting

With ChatGPT entering our lives recently, text generation has become the most popular form of Generative AI. Some pre-trained models in this area include GPT-4, powering ChatGPT by OpenAI; LaMDA, which originally powered Google Bard; Meta AI’s openly available Llama models; and more.

One of the interesting things about Generative AI is that it can adapt to a certain writing style or tone. For example, you could ask it to create a screenplay that follows the style of another screenplay, or to write a certain scene in a certain tone or atmosphere. I’m not saying the generated screenplay would be good, or even interesting, but the results are sometimes surprising.

To support the explanations in this blog post with examples, I ran an experiment, asking GPT-4 to generate a screenplay for me based on my own story, told in one of my earlier blog posts (see link below), about the White House clinical trials project.

I increased the Temperature (a setting that controls how random, and therefore how “creative”, the model’s word choices are) for creativity, used Prompt Engineering to allow the model to deviate slightly from the original story to make things more interesting, and even allowed the model to use some profanities (may the Gods of Corporate PC have mercy on my soul). The result was kind of surprising: not great, but it did generate an initial screenplay. For the film’s name, it suggested “Code of Hope”. Oh well.
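What does raising the temperature actually do? Text-generation APIs, including OpenAI’s, expose a `temperature` parameter that rescales the model’s raw scores for each candidate next word before they are turned into probabilities. The sketch below shows the general mechanism (temperature-scaled softmax followed by random sampling) with hypothetical scores for three candidate words; it is an illustration of the technique, not OpenAI’s implementation.

```python
import math
import random


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw model scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {token: s / temperature for token, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(s - top) for token, s in scaled.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}


def sample_next(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Randomly pick the next word according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores a model might assign to the next word of a scene.
scores = {"whispers": 2.0, "shouts": 1.0, "sings": 0.1}
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, {tok: round(p, 3) for tok, p in probs.items()})

rng = random.Random(42)
print(sample_next(scores, temperature=1.5, rng=rng))
```

At a low temperature, the top-scoring word dominates and the output becomes predictable; at a higher temperature, the probabilities flatten, so less likely words get picked more often. That is the “creativity” knob, and also why high-temperature output can drift off course.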

I then asked the model to produce a script for specific acts:

For the sake of this exercise, I synthesized some mock examples of other screenplays and asked GPT-4 to also use those examples when creating the screenplay.

The technique of selecting, in real time, the examples most relevant to the request and including them in the prompt for the model to learn from is sometimes referred to as dynamic few-shot.
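Dynamic few-shot selection can be sketched as: score every stored example against the new request, keep the top few, and place them in the prompt ahead of the request. Production systems typically rank examples using embedding vectors; this sketch swaps in a simpler similarity measure (cosine similarity over word counts) so it runs with only the Python standard library. The mock scenes, the prompt wording, and the function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Represent a text as lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(request: str, library: list[str], k: int = 2) -> list[str]:
    """Pick the k library examples most similar to the request (dynamic few-shot)."""
    query = bag_of_words(request)
    ranked = sorted(library, key=lambda ex: cosine_similarity(query, bag_of_words(ex)), reverse=True)
    return ranked[:k]


def build_prompt(request: str, library: list[str], k: int = 2) -> str:
    """Place the selected examples ahead of the actual request."""
    shots = select_examples(request, library, k)
    parts = [f"Example scene:\n{ex}" for ex in shots]
    parts.append(f"Now write a scene for: {request}")
    return "\n\n".join(parts)


# Hypothetical mock screenplay snippets.
library = [
    "INT. HOSPITAL - NIGHT. A doctor reviews clinical trial results.",
    "EXT. BEACH - DAY. Two friends argue about a surfboard.",
    "INT. LAB - NIGHT. Engineers debug code before a deadline.",
]
request = "Scientists write code at night to match patients with clinical trials"
print(build_prompt(request, library))
```

The beach scene shares no words with the request, so it is left out, and only the hospital and lab scenes are shown to the model. Because the selection happens per request, a different request would pull in different examples from the same library.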

This exercise is especially interesting in the context of the WGA agreement, and the discussion around limitations on training on screenwriters’ work. To spell this out, screenwriters should keep in mind that their prior screenplays could technically be used to produce new content in the same tone and style: not just new scenes for an existing screenplay, but also totally new screenplays for other stories. It is not just about training, but also about fine-tuning and about learning from examples supplied in the prompt.

Voice generation

Voice generation tools typically take written text as input and convert it into high-quality, natural-sounding speech audio. This is called Text-to-Speech. Some known models and tools in this area include Microsoft Azure Text to Speech, Google’s WaveNet and Tacotron, Voicebox by Meta AI, Overdub and Lyrebird from Descript, and more.

One interesting feature of some voice generation tools is that they let you create a customized, synthetic voice from your own audio recordings of selected voice talents. This means you can build a voice for a character by providing studio-recorded, high-quality human speech samples as training data, and create a realistic-sounding voice that says whatever you want it to say. The generated voice can be used for voiceover in ads, films, games, or any type of narration.

And one of the coolest things is that some of those tools can make the talent’s voice speak other languages, without the need for additional language-specific training data. It means that based on English samples alone, you can generate speech in other languages.

What does this mean? It means that, for example, in theory you could narrate the entire Tokyo subway system, in Japanese, using any voice you like - be it Morgan Freeman, Taylor Swift, or the said Brit - just based on their voice samples in English, if they were ever to agree to that. Again, theoretically.

This technology raises questions about the future of foreign-language dubbing. Depending on the quality of the outcome, and on overcoming potential accent issues, this could have a big impact on the dubbing profession and on how films are localized for foreign languages. It could very well be that audiences would prefer to hear the original actor’s voice speaking the foreign language rather than someone else’s voice dubbing the film. As for voice actors in animated movies, the future of dubbing might look different, but could still be impacted.

We will not get into the topic of language translation here – AI-based translation is super interesting by itself. Separate blog post on translations will come soon.

The question remains: what happens if the voice is generated from the talent’s voice samples without their actual participation, consent, and compensation? This is why the recent announcement of the SAG-AFTRA and Replica Studios AI Voice Agreement, which aims to safely create and license digital replicas of voice talents for use in video games and other media, is super interesting. But it is not enough.

This is also why the provisions on associating samples with specific productions and projects are important. We’ll get back to that in a minute to talk about what’s actionable here and how this could potentially be enforced.

There’s also a lot to be said about other forms of audio generation, including music and sound generation. Music generation extends beyond films and can impact the entire music industry. This topic is close to my heart, so I will write a separate post on it soon.

Image Generation

Image generation is an area of fast-paced evolution with tools and models like DALL-E from OpenAI, Midjourney, Stable Diffusion from Stability AI, Adobe Firefly, and more.

Generative AI in this area can help generate concept art, storyboards, and media related to the film, and assist in graphic design. Generative AI can make it easier for filmmakers to create realistic visual effects, backgrounds, and even characters. It can assist in pre-visualization, helping directors and cinematographers explore their vision before actual filming. Similar to text and voice samples, you can provide image samples to fine-tune the image generation, for example to define what the characters in the image should look like.

To continue the experiment above, here’s what happened when I asked Generative AI to create a film poster for “Code of Hope”, our theoretical film about the White House clinical trials story.

As is often the case with AI-generated imagery, it is far from perfect. Another phenomenon, which looks like gender bias, was this: when prompting for the image, I described each character in detail and made it clear that 2 of the 3 computer scientists were female. However, it was very hard to get the model to create an image that included 2 female computer scientists; it kept coming up with male scientists. Not cool.

And for completeness of this exercise, I asked DALL-E to generate a storyboard for our theoretical film. The AI suggested key scenes and then broke them into frames. Here are some of the frames generated, for 2 of the scenes.

Video Generation

Video generation is probably one of the more complicated areas in the context of this discussion. Video generation tools vary in their functionality and technology. They include video editing tools that use AI to speed up the editing process, and generative text-to-video apps that take prompts (textual descriptions of the scene the user wants to create) and generate a video output.

AI-based video generation is supported by models and tools like Descript, Synthesia, Imagen, Stable Video Diffusion, Rephrase.ai (recently acquired by Adobe), Emu from Meta, Lumiere (unveiled by Google this week), and more.

Deepfake is another video generation technology – a very different one, and perhaps the most daunting of them all. This technology swaps faces and voices in videos, and is used to produce fake media. This topic deserves its own post, coming soon.

Video generation technology is not fully there yet. At the time of writing, various tools are popping up that generate videos a few seconds long for a specific scene. Those tools typically combine existing visuals or fill in missing parts. In some cases, they take in a picture and produce a video by adding frames with some movement based on the original picture.
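Generative video tools use learned models to predict plausible motion, which is far beyond what a short example can show. The simplest possible way to “fill in missing parts” between two frames is linear interpolation (essentially a cross-fade), sketched below on tiny grayscale frames stored as nested lists of pixel values. This is a hypothetical stand-in meant only to show what adding in-between frames means mechanically, not how any of the tools mentioned above work.

```python
def interpolate_frames(start: list[list[int]], end: list[list[int]], steps: int) -> list[list[list[int]]]:
    """Generate `steps` in-between frames by linearly blending two keyframes.

    Frames are grids (lists of rows) of grayscale pixel values from 0 to 255.
    """
    frames = []
    for i in range(1, steps + 1):
        alpha = i / (steps + 1)  # how far this frame is from `start` toward `end`
        frame = [
            [round((1 - alpha) * a + alpha * b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(start, end)
        ]
        frames.append(frame)
    return frames


# Hypothetical 2x2 keyframes: the top row brightens from black to white.
first = [[0, 0], [0, 0]]
last = [[255, 255], [0, 0]]
for frame in interpolate_frames(first, last, steps=3):
    print(frame)
```

Each generated frame moves a little further from the first keyframe toward the last one. A blend like this produces fades rather than real motion, which is exactly the gap that learned video models try to close.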

Got to say, those videos remind me of the animated images on the Daily Prophet newspaper from the Harry Potter films.

But video generation technology is evolving fast and has huge potential.

Generative AI can contribute to creating more sophisticated visual effects in films. It could generate digital characters, opening up new possibilities such as posthumous performances, as seen with Carrie Fisher in "Star Wars: The Rise of Skywalker" in 2019, or younger versions of actors, as seen with young Luke Skywalker appearing in the Season 2 finale of The Mandalorian (told you, people: the Star Wars universe is an inspiration). It could potentially also allow films to be personalized to viewer preferences, with AI changing things like the plot, the ending, or even product ads in real time. Video generation technology can assist in creating character animations, and even entire environments. It can further enhance the ability to create scenes that would otherwise be risky, and this may redefine the role of stunts in films.

Video generation can also be used to alter a film during post-production. This raises ethical questions, such as what happens if substantial changes are made that an actor might not have signed up for originally, such as the inclusion of hate speech or nudity. Or taking it to the extreme, what if an entire sequel is produced using the actor’s AI replica? Beyond compensation aspects, this could potentially be misaligned with the actor's professional standards or negatively affect their portrayal of a character, thereby damaging their professional reputation.

This is troubling, as it touches on the topic of actors owning their likeness and identity. It also leads to growing concerns over the authenticity of content. And that’s before we even start talking about malicious parties.

A few words on casting

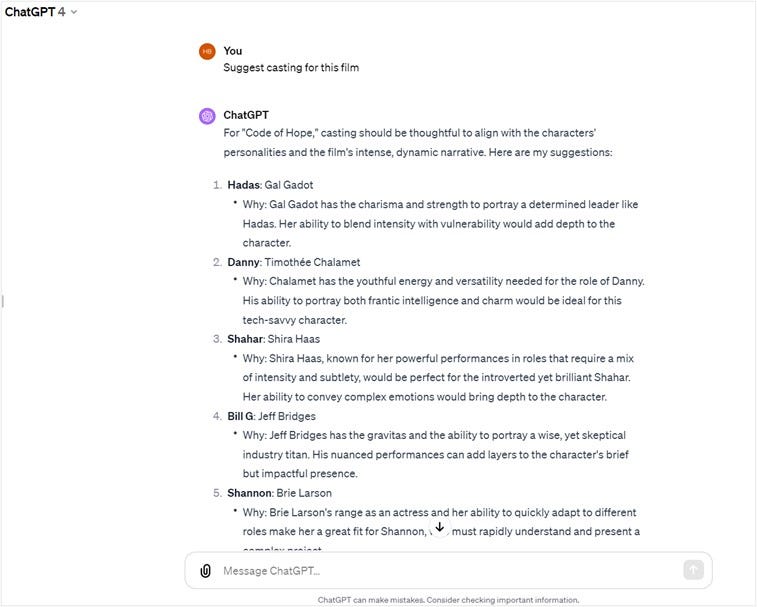

To wrap up the experiment, I asked the AI for casting suggestions for the above theoretical film about the White House adventure. GPT-4 suggested this:

Got to say, I was pleased with the choice of Gal Gadot and Shira Haas. I had someone else in mind for the Danny character, and in general, I am not sure how this combination of actors would work together. But how on earth would AI understand human chemistry?

Moreover, since the AI is merely repeating patterns it has seen before, its casting suggestions would likely always be more of the same rather than new, unconventional choices. This also means that if it were up to the AI, actors would play similar roles over and over again. But diversity is much more interesting.

Perhaps most intriguing of them all was the casting suggestion for the Bill G character. GPT4 suggested many options, from Jeff Bridges to Anthony Hopkins. I asked again and again, in different ways. But it did not come up with the obvious answer – well, at least the answer that was painfully obvious to me.

People, the only right answer for this casting decision is a cameo performance. No other person in the world could perform this role as well as Bill G himself. How realistic would that be? Well, remember, this is a theoretical film.

Association of data with specific productions or projects

So, what else is actionable here? Here’s another one:

SAG-AFTRA reached an agreement with the studios that allows them to use AI-generated content based on a performer’s likeness within the same project. That makes sense. But how do you make sure the data remains within the boundaries of the project?

The concept of watermarking and its importance were covered in one of my previous blog posts on AI regulation (see link below). In this context, emerging regulation looks to enforce watermarking of AI-generated artifacts, including images, speech, and videos generated by AI.
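At its core, a watermark hides a machine-readable mark inside the content itself without visibly changing it. The sketch below uses the classic least-significant-bit (LSB) trick on a flat list of hypothetical pixel values. Real watermarking schemes for AI-generated media are designed to survive compression, cropping, and editing; this one is not, and is shown only to illustrate the basic idea. The function names and the tag text are hypothetical.

```python
def embed_watermark(pixels: list[int], message: str) -> list[int]:
    """Hide `message` in the least significant bit of each pixel value (0-255)."""
    bits = [int(b) for byte in message.encode("utf-8") for b in format(byte, "08b")]
    if len(bits) > len(pixels):
        raise ValueError("image too small for this watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # overwrite only the lowest bit
    return marked


def extract_watermark(pixels: list[int], length: int) -> str:
    """Read back `length` bytes of hidden message from the pixels' lowest bits."""
    bits = [p & 1 for p in pixels[: length * 8]]
    data = bytes(int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


# Hypothetical grayscale image flattened into a list of pixel values.
image = [120, 121, 200, 33] * 20
tag = "AI-GEN"
marked = embed_watermark(image, tag)
print(extract_watermark(marked, len(tag.encode("utf-8"))))
```

Each pixel changes by at most one brightness level, which is invisible to the eye, yet software that knows where to look can read the tag back.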

The recent Biden Executive Order on AI calls for guidance on watermarking so that AI-generated content is clearly labeled. But this is not enough. What’s needed here is an agreement with the studios to expand the industry’s watermarking standards to include the identity of the studio as well as the name of the specific project. Watermarking should be applied both to the original material used for training or fine-tuning and to the AI-generated content. This could help track and reduce potential leakage between projects.
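The proposal above, tagging both the source material and the generated output with the studio and the specific project, could be sketched as a signed provenance record that is checked whenever the content is used. This sketch uses HMAC-SHA256 from Python’s standard library so that the record cannot be edited without the key. The record fields, the shared secret, the studio and project names, and the leak check are all hypothetical illustrations, not an existing industry standard. In practice, such a record would be carried inside the content (for example, via a watermark) or in its metadata.

```python
import hashlib
import hmac
import json


def _sign(record: dict, secret: bytes) -> str:
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def make_provenance_tag(content: bytes, studio: str, project: str, secret: bytes) -> dict:
    """Build a tamper-evident record tying content to a studio and a project."""
    record = {
        "studio": studio,
        "project": project,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    record["signature"] = _sign(record, secret)
    return record


def check_use(content: bytes, tag: dict, current_project: str, secret: bytes) -> str:
    """Verify the tag, then flag content used outside its original project."""
    record = {k: tag[k] for k in ("studio", "project", "content_sha256")}
    if not hmac.compare_digest(_sign(record, secret), tag["signature"]):
        return "invalid tag"
    if hashlib.sha256(content).hexdigest() != tag["content_sha256"]:
        return "content does not match tag"
    if tag["project"] != current_project:
        return "leak: content belongs to another project"
    return "ok"


# Hypothetical key and actor scan.
secret = b"hypothetical-shared-key"
scan = b"...raw bytes of an actor's voice sample..."
tag = make_provenance_tag(scan, "Example Studio", "Code of Hope", secret)
print(check_use(scan, tag, "Code of Hope", secret))
print(check_use(scan, tag, "Code of Hope 2", secret))
```

Using the scan within its own project passes, while reusing it in a different production is flagged. Simply editing the `project` field to hide the reuse breaks the signature, so the tag reports itself as invalid.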

What does it all mean?

Those were just a few examples, aiming to demonstrate the impact Generative AI can have on the film industry.

While AI offers many opportunities to assist creative work, it also poses challenges related to employment, creative rights, and the nature of art itself. The industry will need to adapt by balancing the benefits of AI with the irreplaceable elements of human creativity, storytelling and acting.

Some of this is disheartening. Will Generative AI replace the role of humans in this process? And doesn’t owning the performer’s images, videos, and voice, and being able to re-use them at will take over the performer’s identity?

Some people speculate about a dystopian future in which consumers could ask AI to generate new films for them with specific actors, plot, and location, all customized to their personal preferences. I’m going to risk famous last words here and say that this is very far-fetched.

Because it completely ignores the IP rights in existing work. And because it completely ignores the fact that Generative AI is not really creative: it repeats patterns it has seen before, as I tried to demonstrate through the examples in this post. We also need to remember that AI is only as good as the data it is fed. Generative AI is good at synthesizing and combining existing art, so for us as consumers, this would mean a future of synthetic films that feel like a hundred other films you have seen before.

My prediction: Generative AI will evolve as assistive technology to humans in the film industry. We call those assistive technologies Copilots.

But humans will continue to drive the creative work. Humans that innovate, that see things differently, that think out-of-the-box, imagine new worlds, invent new paradigms, create new works of art, tell stories that were never told before, and inspire people all around the world. Humans that put their soul into each character they bring to life.

Recent posts:

About me: Real person. Opinions are my own. See more about me here.

About Verge of Singularity.

LinkedIn: https://www.linkedin.com/in/hadas-bitran/

X: @hadasbitran

Instagram: @hadasbitran