Evaluating Quality of AI in Healthcare

About ground truth, quality evaluation, industry benchmarks, overfitting, the role of clinical experts in healthcare AI, and the story of one special data set.

Recently, OpenAI released HealthBench, a benchmark designed to evaluate how well AI models perform when answering healthcare questions. HealthBench includes a set of medical questions and criteria for scoring models based on their responses.

Evaluation is critical to verify the performance of AI systems. And when we talk about performance of an AI model, we’re talking quality. As in - is the answer correct and complete. And in healthcare, as you know, quality is critical.

Before we dive into benchmarks, let’s cover a few basic concepts around performance evaluation of AI models (skip ahead to Non-deterministic outputs if this is too basic).

Evaluation 101

How do you evaluate the performance of AI models?

Ground Truth

Evaluation requires a reference point: ground truth. Ground truth is the authoritative set of answers which the results of the AI model are compared against. But ground truth is contextual: ground truth needs to be specific to the task the model is supposed to accomplish. So, for example (somewhat over-simplified):

For a model that associates ICD-10 codes with a clinical note: a verified list of clinical codes associated with the note

For a medical chat: the clinically-validated response

For a trial-matching AI: eligibility of the patient as defined by the trial protocol

Ground truth doesn’t fall from the sky. It requires clinical experts - doctors, nurses, radiologists and subject-matter experts who annotate, validate, and sometimes even argue about the “right” answer. Sometimes, the “right” answer is just what happened in reality, for example, was the patient eventually diagnosed with the disease that an AI model predicted. Associating an answer with the data in the context of the task for the sake of creating ground truth is generally referred to as labelling or annotation.

In this era of AI in healthcare, the need for clinical experts for producing annotated data and ground truth becomes even more critical. My team relies on physicians and nurses not only to annotate data for ground truth, but also to support the evaluation process and validate the outputs. Without them, our products would be meaningless.


Measuring Quality

To measure quality, we need metrics to measure the model results against the ground truth.

Let’s take for example an AI model that tries to predict whether a patient has a disease. Let’s assume that reality gives us the “truth” (does the patient actually have the disease?), and the model gives us a prediction (does the model say they have the disease?). Now, there are 4 possible outcomes:

True Positive (TP): The patient has the disease, and the model correctly predicts they have it. (Correct catch)

False Positive (FP): The patient does not have the disease, but the model incorrectly predicts they do. (False alarm)

True Negative (TN): The patient does not have the disease, and the model correctly predicts they don’t. (Correct reassurance)

False Negative (FN): The patient does have the disease, but the model fails to detect it. (Missed diagnosis!)
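To make the four outcomes concrete, here is a minimal Python sketch that tallies them for a handful of test cases. The function name `confusion_counts` and the sample labels are made up for illustration; `True` means "has the disease".

```python
from collections import Counter


def confusion_counts(truth, predicted):
    """Tally TP, FP, TN, FN for paired boolean labels (True = has the disease)."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    counts = Counter({"TP": 0, "FP": 0, "TN": 0, "FN": 0})
    for actual, guess in zip(truth, predicted):
        if guess and actual:
            counts["TP"] += 1  # correct catch
        elif guess and not actual:
            counts["FP"] += 1  # false alarm
        elif not guess and not actual:
            counts["TN"] += 1  # correct reassurance
        else:
            counts["FN"] += 1  # missed diagnosis
    return dict(counts)


# Hypothetical test cases: what reality says vs. what the model says.
truth = [True, True, True, False, False, False, False, True]
predicted = [True, False, True, True, False, False, False, True]

counts = confusion_counts(truth, predicted)
print(counts)
```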

We now count the results of all our test cases, to measure quality:

Precision: Of the cases flagged as positive, how many were truly positive?

Recall: Of all the cases that are actually positive, how many did we catch?

F1 score: The harmonic mean of Precision and Recall.

In medicine, slightly different terminology is used:

Sensitivity (the same as Recall): Ability to correctly identify patients with the condition.

Specificity (not the same as Precision): Ability to correctly exclude those without the condition.

How those are calculated:

Precision = TP / (TP + FP)

Of all the patients flagged as sick, how many were actually sick?

High precision = fewer false alarms.

Recall (== Sensitivity in medicine) = TP / (TP + FN)

Of all the sick patients, how many did we correctly catch?

High recall = fewer missed cases.

Specificity = TN / (TN + FP)

Of all the healthy patients, how many did we correctly identify as healthy?

High specificity = few false alarms on healthy patients.

F1 Score = 2 × (Precision × Recall) / (Precision + Recall)

Balanced score to make sure you’re not optimizing for one metric while tanking the other.
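The formulas above translate almost directly into code. This is a small sketch, not a library API: `quality_metrics` and `safe_div` are names I made up, the counts are invented, and returning 0.0 when a denominator is zero is just one possible convention.

```python
def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def quality_metrics(tp, fp, tn, fn):
    """Compute precision, recall (sensitivity), specificity and F1 from counts."""
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)  # identical to sensitivity
    specificity = safe_div(tn, tn + fp)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Invented counts: 1,000 patients, 100 of them actually sick.
metrics = quality_metrics(tp=80, fp=20, tn=880, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts, precision and recall are both 80 / 100, while specificity is 880 / 900. Note how specificity looks flattering simply because healthy patients dominate the sample.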

Evaluation vs. Training - and Overfitting

There is a big difference between training and evaluation:

Training models involves feeding the model with data so it can learn patterns. So that’s the training set. Evaluation means testing the model on data the model did not see before, to see if the model is capable of generalizing properly. That’s the test set.

Evaluating your model on your training set is kinda cheating, because it means the model can just memorize those examples. This is why when you have a data set, you would typically hold part of it out of your training set. Holding out 25% is common.

This held-out set can then be used for evaluation as part of your test set.
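A hold-out split can be as simple as shuffling and slicing. The sketch below uses only the Python standard library; the function name, the fixed seed and the fake record IDs are assumptions for illustration.

```python
import random


def train_test_split(records, test_fraction=0.25, seed=42):
    """Shuffle a copy of the records and hold out a fraction for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical record identifiers standing in for real examples.
patients = [f"patient-{i:03d}" for i in range(100)]
train_set, test_set = train_test_split(patients)
print(len(train_set), len(test_set))
```

One caveat worth keeping in mind: if several records belong to the same patient, splitting by record can leak information between the two sets, so in that situation you would usually split by patient instead.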

Another phenomenon to mention here is overfitting, which happens when a model learns the training data too perfectly, including random noise and irrelevant patterns, instead of learning the real underlying patterns. This could also happen when your training data is not diverse enough, or just not big enough.

Overfitted models will give excellent results on data that looks like things they have already seen, but once they face something new, they fall apart, because they are not able to generalize.
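Here is a deliberately extreme caricature of overfitting: a "model" that is nothing more than a lookup table. Everything in it is made up for illustration, and the labels are pure noise, so there is no real pattern to learn. The point is only to show the gap between training and test scores.

```python
import random

rng = random.Random(0)


def make_examples(n, start_id):
    """Each example is (unique record id, label); labels are random noise."""
    return [(start_id + i, rng.random() < 0.5) for i in range(n)]


class MemorizingModel:
    """Memorizes every training example; guesses the majority label otherwise."""

    def fit(self, examples):
        self.table = dict(examples)
        positives = sum(label for _, label in examples)
        self.default = positives * 2 >= len(examples)
        return self

    def predict(self, record_id):
        return self.table.get(record_id, self.default)


def accuracy(model, examples):
    correct = sum(model.predict(x) == y for x, y in examples)
    return correct / len(examples)


train = make_examples(200, start_id=0)
test = make_examples(200, start_id=10_000)  # ids the model has never seen

model = MemorizingModel().fit(train)
train_accuracy = accuracy(model, train)
test_accuracy = accuracy(model, test)
print(f"train accuracy: {train_accuracy:.2f}, test accuracy: {test_accuracy:.2f}")
```

The training accuracy is perfect by construction, while the test accuracy can only hover around chance. Real overfitting is subtler than this, but the symptom is the same: a large gap between the two numbers.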


Evaluation in an era of non-deterministic outputs

With large language models, often the output of the model can vary. Meaning, if you ask the exact same question twice, you might receive two different answers that may be phrased differently, but both would still be correct. How do you compare that to the ground truth?

In the past you would simply do textual comparison of the output against the ground truth. But now, methods like ROUGE-L that rely on textual comparison become less relevant. You now need to compare the clinical essence rather than string overlap.
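To see why string overlap falls short, here is a simplified ROUGE-L style score: the F1 of the longest common subsequence of tokens. It is a sketch, not the official ROUGE implementation (tokenization here is just lowercase whitespace splitting), and the two sentences are invented.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for token_a in a:
        curr = [0]
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """Simplified ROUGE-L: F1 over the LCS of whitespace-separated tokens."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not ref_tokens or not cand_tokens:
        return 0.0
    lcs = lcs_length(ref_tokens, cand_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


reference = "patient should start metformin for type 2 diabetes"
candidate = "begin metformin therapy to treat the patient's t2dm"

score = rouge_l_f1(reference, candidate)
print(f"ROUGE-L F1: {score:.3f}")
```

Both sentences say essentially the same thing clinically, yet the only token they share is "metformin", so the overlap score is very low.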

There are several approaches. Some rely on small language models (SLM) that are specific to the task of extracting clinical essence, like the HALOM safeguard we discussed in one of my blog posts on Why AI Hallucinates. Others rely on asking an LLM whether two answers have the same clinical meaning, which is an approach we are starting to see in industry benchmarks.
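The LLM-as-a-judge idea can be sketched in a few lines. To be clear about assumptions: the prompt wording, the function `same_clinical_meaning` and the `ask_llm` callable are all hypothetical. You would plug in whatever client calls your model; the stub below only exists so the sketch runs offline.

```python
from typing import Callable

# Hypothetical prompt; real judge prompts need careful design and validation.
JUDGE_PROMPT = (
    "You are a clinical reviewer.\n"
    "Reference answer: {reference}\n"
    "Candidate answer: {candidate}\n"
    "Do the two answers have the same clinical meaning? Reply YES or NO."
)


def same_clinical_meaning(
    reference: str, candidate: str, ask_llm: Callable[[str], str]
) -> bool:
    """Ask a judge model whether two answers are clinically equivalent."""
    prompt = JUDGE_PROMPT.format(reference=reference, candidate=candidate)
    verdict = ask_llm(prompt).strip().upper()
    return verdict.startswith("YES")


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call; always answers YES."""
    return "YES"


is_equivalent = same_clinical_meaning(
    "Start metformin for type 2 diabetes.",
    "Begin metformin therapy to treat T2DM.",
    ask_llm=stub_llm,
)
print(is_equivalent)
```

Keeping the model call behind a plain callable makes it easy to swap judges, and to have clinical experts spot-check the judge itself, since the judge is a model too and can be wrong.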

Industry Benchmarks

So, what do we mean by benchmarking? And how is it different from evaluation?

As explained above, Evaluation refers to assessing how well a model performs on a given task. It typically focuses on your model, your test set, and the ground truth produced for that test set, given the AI task you want to evaluate.

Benchmarking is about comparing the model performance against a standardized reference. Industry benchmarks typically come with a data set and a pre-defined ground truth, for a particular task, and are used to compare against other models.

The catch is, however, in the details: what data set is being used in the benchmark, what the ground truth is and how it was annotated, which tasks the benchmark provides results for, and how exactly the model outputs are compared against that ground truth. Any of these can introduce biases.

Simple example: if your model associates ICD10 codes with a clinical note, it makes a huge difference whether the benchmark is annotated for entity linking or actual coding for reimbursement, whether the ground truth was produced by an actual clinical coding expert, and whether the input includes the patient history or not.
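A tiny sketch shows how much the annotation choice matters. The same model output is scored against two hypothetical ground truths for one clinical note. The code sets below are made up for illustration and are not coding guidance.

```python
def precision_recall(predicted, truth):
    """Set-based precision and recall for a list of predicted codes."""
    predicted, truth = set(predicted), set(truth)
    hits = len(predicted & truth)
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical model output for one clinical note.
model_codes = {"E11.9", "I10", "Z79.4"}

# Hypothetical annotation A: every condition mentioned anywhere in the note.
entity_linking_truth = {"E11.9", "I10", "Z79.4", "J45.909"}

# Hypothetical annotation B: only the codes a coder would submit for the encounter.
billing_truth = {"E11.9", "I10"}

linking_scores = precision_recall(model_codes, entity_linking_truth)
billing_scores = precision_recall(model_codes, billing_truth)
print("entity linking:", linking_scores)
print("billing:", billing_scores)
```

Same model, same note, same output: perfect precision with imperfect recall against one annotation, and the reverse against the other.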

A few industry benchmarks have emerged, for different tasks and different frameworks, each one with its own strengths and weaknesses. I won’t cover them all here, and will just refer to a couple of them.

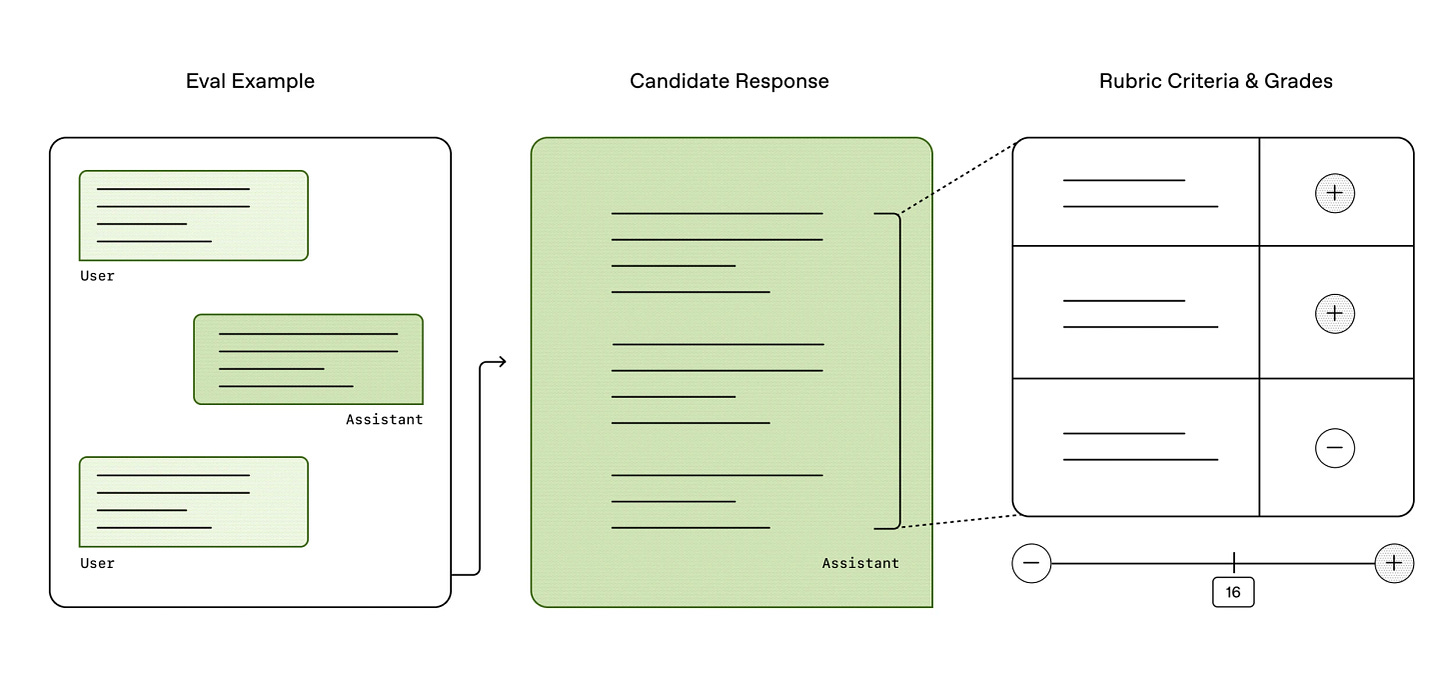

HealthBench is a benchmark that was recently released by OpenAI. It is designed to assess AI models in healthcare conversations. It includes ~5000 medical questions in multiple languages, created by clinicians, and it also includes a grading mechanism. Model responses are graded against multiple rubric criteria, along 5 different axes and across various healthcare themes.
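To give a feel for rubric-based grading in general, here is a generic sketch. The criterion names, point values and the normalization are assumptions for illustration, and this is not HealthBench's actual code. In practice, deciding which criteria a response meets is itself done by a grader (human or model).

```python
def rubric_score(criteria, met_ids):
    """Score one response: points earned over maximum positive points, in [0, 1]."""
    earned = sum(c["points"] for c in criteria if c["id"] in met_ids)
    max_points = sum(c["points"] for c in criteria if c["points"] > 0)
    if max_points == 0:
        return 0.0
    return min(1.0, max(0.0, earned / max_points))


# Hypothetical rubric for one question; negative points penalize harmful content.
criteria = [
    {"id": "advises_emergency_care", "points": 5},
    {"id": "asks_about_symptom_duration", "points": 3},
    {"id": "suggests_unsafe_dose", "points": -4},
]

# Suppose the grader decided the response met these two criteria.
met = {"advises_emergency_care", "suggests_unsafe_dose"}

score = rubric_score(criteria, met)
print(f"rubric score: {score:.3f}")
```

Notice that the score only reflects what the rubric asks about. Anything the criteria don't cover simply doesn't show up in the number.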

While HealthBench is impressive, I have a lot to say about it and its glaring gaps. For example, there are critical things that it does not measure at all. Groundedness is missing from its judging criteria axes, and it gives zero f’s to whether or not the model provided supportive evidence for its answers. Like, really?

More issues. Gaps in the HealthBench themes cause bias for systems intended for specific personas. There is a big difference between the nature of answers you would expect from a system that is intended to serve patients vs. a system intended for doctors. I’ve ranted about personas in one of my previous blog posts about Inception of Healthcare Agents. Those kinds of gaps ignore critical aspects while still impacting the scores.


Not all bad, though. With some adaptation we were able to leverage some of HealthBench and make it useful. But the key learning here is that even industry benchmarks have biases, and that performance depends on how it is being measured, which depends on how the benchmark was designed.

Another example of an industry benchmark is MedHELM, an evaluation framework designed to assess the performance of LLMs in medical tasks. It was developed in collaboration between Stanford, Microsoft Health & Life Sciences, and others. MedHELM covers diverse healthcare scenarios like diagnostics, patient communication, and more. So, not just healthcare conversations.

A word on leaderboards. Many benchmarks publish a leaderboard of models and how they rank. Sounds fun, but it’s not always the full story. For example, at the time of writing this post, DeepSeek R1 is at the top of the MedHELM board. Ugh.

Leaderboards may feed competitive egos, but they don’t tell you everything you need to know on whether a model is actually suitable for your clinical use case.

Story Time: Synthetic Patients Data

Let’s end with a story from the trenches. You know, it’s been a while.

This is the story of the Tehila data set, an internal data set we created and curated over time to evaluate the performance of our AI models.

It all started with our clinical trials matching system, Project New Hope. I shared the unusual story of that project in my blog post on GenAI and Clinical Trials. This was way before the Generative AI era, and the model was about matching patients to clinical trials, based on the patient data and the trials’ eligibility criteria.

To evaluate whether the system was producing the right results, we needed ground truth on the clinical trials matching task: eligibility criteria against patient data.

Evaluating the performance of an AI model needs to be specific to the task that the model is supposed to accomplish.

We needed to constantly measure the performance of the system after every change we made, which meant minimizing noise from other factors to allow consistent evaluation. So, we took the database of clinical trials from clinicaltrials.gov and froze it in time, and then had medical doctors match a set of patients to those trials according to the eligibility criteria, as our ground truth. We then set up an automated pipeline that compared the results of the system to the ground truth on every change we pushed.
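The core of such a pipeline can be sketched roughly like this. This is not our actual pipeline: the function names, the tolerance, the baseline numbers and the patient and trial IDs are all hypothetical.

```python
def evaluate_matches(system_matches, ground_truth):
    """Compare (patient_id, trial_id) pairs from the system to the frozen ground truth."""
    tp = len(system_matches & ground_truth)
    fp = len(system_matches - ground_truth)
    fn = len(ground_truth - system_matches)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


def find_regressions(current, baseline, tolerance=0.01):
    """List the metrics that dropped below the accepted baseline."""
    return [
        name for name, value in baseline.items()
        if current[name] < value - tolerance
    ]


# Hypothetical frozen ground truth: pairs annotated by clinicians.
ground_truth = {
    ("patient-1", "TRIAL-A"),
    ("patient-1", "TRIAL-B"),
    ("patient-2", "TRIAL-C"),
    ("patient-3", "TRIAL-D"),
}

# Hypothetical output of the system after a code change.
system_matches = {
    ("patient-1", "TRIAL-A"),
    ("patient-2", "TRIAL-C"),
    ("patient-3", "TRIAL-D"),
    ("patient-3", "TRIAL-E"),
}

current = evaluate_matches(system_matches, ground_truth)
regressions = find_regressions(current, baseline={"precision": 0.80, "recall": 0.70})
print(current, regressions)
```

Because the trial database and the annotations are frozen, any movement in these numbers can be attributed to the change you just pushed rather than to the data shifting underneath you.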

So where did that set of patients come from, you might ask?

Initially, the only data we had was from that White House challenge of Project New Hope, which included about a dozen trial IDs matched against a few dozen anonymized patient records.

Then, a patient named Sharona (pseudonym) heard about the project and reached out. She had stage 4 breast cancer and asked for help to find clinical trials that could be a match for her, giving her consent to sharing her medical data with us. Sadly, Sharona passed away a few months after that.

We needed more patient data, along with how those patients matched to clinical trials based on eligibility criteria. So, we started to build a corpus of synthetic patients.

Initially, the synthetic data set had a few legendary characters, like Patrick who suffered from stage 4 pancreatic cancer, Carrie who had a cardiac arrest, and Kurt who suffered from clinical depression. Yeah, Gen X-ers in the house.

Then, at some point, it included synthetic patients named Dumbledore, Hermione and Lucius Malfoy - with some fake medical conditions, created by Dr. Eldar Cohen, a talented MD and a proud Gryffindor.

But it was a Medical Doctor named Dr. Tehila Fisher-Yosef, who was on a fellowship in our team as part of the 8400 Health Tech Fellowship program, who created a full data set of imaginary patients, with made-up clinical notes for those fake patients, giving the data set its name, the Tehila Data Set.

The data set has evolved over the years: we cleaned it up and added diverse types of data in multiple languages, for various tasks.

We are still using some of this data set today. Sharona is still there.

About Verge of Singularity.

About me: Real person. Opinions are my own. Blog posts are not generated by AI.


LinkedIn: https://www.linkedin.com/in/hadas-bitran/

X: @hadasbitran

Instagram: @hadasbitran
